If you are a regular follower of my blog, you have probably noticed I’m a bit of a storage geek. VAAI, FCoE, WWNs, WWPNs, VMFS, VASA and iSCSI are all music to my ears. So what’s new in vSphere 5.0 storage? A LOT. That team must have been working overtime to come up with all these great new features. Here’s a list of the high-level new features, gleaned from a great VMware whitepaper that I link to at the end of this post.

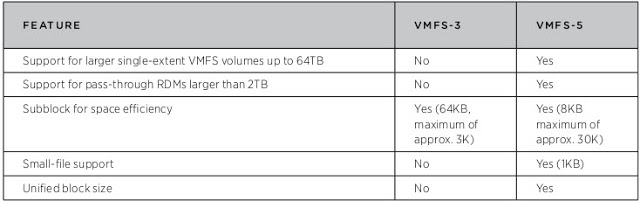

VMFS 5.0

- 64TB LUN support (with NO extents), great for arrays that support large LUNs like 3PAR.

- The partition table is automatically and non-disruptively migrated from MBR to GPT when a volume is grown beyond 2TB.

- Unified block size of 1MB. No more wondering what block size to use. Note that upgraded volumes retain their previous block size, so you may want to reformat old LUNs that don’t use 1MB blocks. I use 8MB blocks, so I’ll need to reformat all of my volumes.

- Non-disruptive upgrade from VMFS-3 to VMFS-5

- Up to 30,000 8K sub-blocks for files such as VMX and logs

- New partitions will be aligned on sector 2048

- Passthru RDMs can be expanded to more than 60TB

- Non-passthru RDMs are still limited to 2TB minus 512 bytes
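For the number-minded, here is a quick Python sketch of the arithmetic behind a few of these figures. It assumes 512-byte sectors and treats "TB" as binary (2^40 bytes); the helper names are my own, not anything from VMware.

```python
# Worked arithmetic behind a few VMFS-5 figures, assuming 512-byte sectors.
SECTOR_BYTES = 512
MiB = 2**20
TiB = 2**40

# An MBR partition entry stores its start and length as 32-bit sector counts,
# so the largest volume it can describe is 2^32 sectors.
mbr_limit_bytes = 2**32 * SECTOR_BYTES

# New VMFS-5 partitions start at sector 2048.
partition_offset_bytes = 2048 * SECTOR_BYTES

# The VMDK / non-passthru RDM ceiling: 2TB minus 512 bytes.
vmdk_max_bytes = 2 * TiB - SECTOR_BYTES


def needs_gpt(volume_bytes: int) -> bool:
    """True if a volume is too large for an MBR partition table."""
    return volume_bytes > mbr_limit_bytes


def is_aligned(offset_bytes: int, boundary_bytes: int) -> bool:
    """True if an offset falls exactly on a boundary (e.g. a 1MB VMFS block)."""
    return offset_bytes % boundary_bytes == 0


print(mbr_limit_bytes // TiB)                       # 2
print(partition_offset_bytes // MiB)                # 1
print(is_aligned(partition_offset_bytes, 1 * MiB))  # True
print(needs_gpt(64 * TiB))                          # True
print(vmdk_max_bytes)                               # 2199023255040
```

In other words, sector 2048 is exactly a 1MB offset, which lines new partitions up with the unified 1MB block size, and anything past 2TB simply cannot be described by MBR.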

There are some legacy holdovers if you upgrade a VMFS-3 volume to VMFS-5, so if at all possible I would create fresh VMFS-5 volumes to get all of the benefits and optimizations. Moving your VMs onto them can be done non-disruptively with Storage vMotion, of course. VMDK files still have a maximum size of 2TB minus 512 bytes. And you are still limited to 256 LUNs per ESXi 5.0 host.

Storage DRS

- Provides smart placement of VMs based on I/O and space capacity.

- A new concept of a datastore cluster in vCenter aggregates datastores into a single unit of consumption for the administrator.

- Storage DRS makes initial placement recommendations and ongoing balancing recommendations, just like DRS does for CPU and memory resources.

- You can configure Storage DRS thresholds for utilized space, I/O latency and I/O imbalance.

- I/O loads are evaluated every 8 hours by default.

- You can put a datastore in maintenance mode, which evacuates all VMs from that datastore to the remaining datastores in the datastore cluster.

- Storage DRS works on VMFS and NFS datastores, but a single datastore cluster cannot mix the two types.

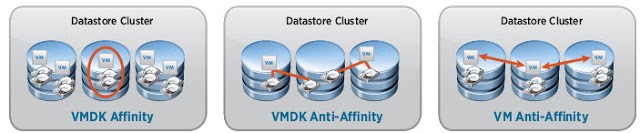

- Affinity rules can be created for VMDK affinity, VMDK anti-affinity and VM anti-affinity.
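To make the threshold idea concrete, here is a toy Python model of initial placement in a datastore cluster. This is not VMware’s actual algorithm, just a sketch under stated assumptions: the 80% space and 15 ms latency thresholds are what I believe the vSphere 5.0 defaults to be, and the `Datastore` class and `recommend_placement` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Datastore:
    name: str
    capacity_gb: float
    used_gb: float
    latency_ms: float  # observed I/O latency for the datastore

    def utilization_after(self, extra_gb: float) -> float:
        """Fraction of capacity used if extra_gb more were placed here."""
        return (self.used_gb + extra_gb) / self.capacity_gb


def recommend_placement(
    cluster: List[Datastore],
    vm_size_gb: float,
    space_threshold: float = 0.80,       # assumed default: 80% utilized space
    latency_threshold_ms: float = 15.0,  # assumed default: 15 ms I/O latency
) -> List[Datastore]:
    """Rank datastores in a datastore cluster for a new VM, best first.

    Datastores that would cross the space threshold, or are already above the
    latency threshold, are dropped; the rest are ordered by projected space
    utilization and then by latency.
    """
    candidates = [
        ds
        for ds in cluster
        if ds.utilization_after(vm_size_gb) <= space_threshold
        and ds.latency_ms <= latency_threshold_ms
    ]
    return sorted(
        candidates, key=lambda ds: (ds.utilization_after(vm_size_gb), ds.latency_ms)
    )


cluster = [
    Datastore("ds01", capacity_gb=2048, used_gb=1500, latency_ms=9.0),
    Datastore("ds02", capacity_gb=2048, used_gb=900, latency_ms=22.0),
    Datastore("ds03", capacity_gb=2048, used_gb=1100, latency_ms=6.0),
]
print([ds.name for ds in recommend_placement(cluster, vm_size_gb=100)])
# ['ds03', 'ds01']: ds02 has plenty of room but is over the latency threshold
```

The point of the example is that space alone would favor ds02; folding I/O latency into the decision is what sets Storage DRS apart from simply picking the emptiest datastore.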

Profile-Driven Storage

- Allows you to match storage SLA requirements of VMs to the right datastore, based on discovered properties of the storage array LUNs via Storage APIs.

- You define storage tiers that can be requested as part of a VM profile. So during the VM provisioning process you are only presented with storage options that match the defined profile requirements.

- Supports NFS, iSCSI, and FC

- You can tag storage with a description (e.g., RAID-5 SAS, remote replication)

- Use storage characteristics or admin-defined descriptions to set up VM placement rules

- Compliance checking
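Conceptually, profile matching boils down to a subset test: a datastore qualifies if it offers every capability the VM’s profile asks for, and compliance checking reruns that same test against the VM’s current datastore. A minimal Python sketch, where the class names, the "Gold" profile and the capability strings are all hypothetical:

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List


@dataclass
class Datastore:
    name: str
    # Array-reported capabilities plus admin-defined tags, as plain strings.
    capabilities: FrozenSet[str] = field(default_factory=frozenset)


@dataclass
class VmStorageProfile:
    name: str
    required: FrozenSet[str]


def compatible_datastores(
    profile: VmStorageProfile, datastores: List[Datastore]
) -> List[Datastore]:
    """Only datastores offering every required capability are offered at provisioning."""
    return [ds for ds in datastores if profile.required <= ds.capabilities]


def is_compliant(profile: VmStorageProfile, current: Datastore) -> bool:
    """Compliance check: does the VM's current datastore still satisfy its profile?"""
    return profile.required <= current.capabilities


gold = VmStorageProfile("Gold", frozenset({"RAID-5 SAS", "remote replication"}))
stores = [
    Datastore("lun-sas-repl", frozenset({"RAID-5 SAS", "remote replication"})),
    Datastore("lun-sas", frozenset({"RAID-5 SAS"})),
    Datastore("lun-sata", frozenset({"RAID-6 SATA"})),
]
print([ds.name for ds in compatible_datastores(gold, stores)])  # ['lun-sas-repl']
print(is_compliant(gold, stores[1]))  # False
```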

Fibre Channel over Ethernet Software Initiator

- Requires a network adapter that supports FCoE offload (currently only the Intel X520)

- Otherwise very similar to the iSCSI software initiator in concept

iSCSI Initiator Enhancements

- Properly configuring iSCSI in vSphere 4.0 was not as simple as a few clicks in the GUI. You had to resort to the command line to properly bind the NICs and use multipathing. No more! vSphere 5.0 offers full GUI configuration of iSCSI network parameters and port bindings.

Storage I/O Control

- Extended to NFS datastores (VMFS only in 4.x).

- Complete coverage of all datastore types, giving high assurance that no VM will hog storage resources.
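The core idea behind Storage I/O Control is that shares only kick in once datastore latency crosses a congestion threshold. Here is a simplified, single-datastore Python sketch of that idea. Assumptions: a 30 ms default threshold, the Low/Normal/High disk share values of 500/1000/2000, and a fixed pool of queue slots. The real feature throttles each host’s device queue depth in a distributed way, and `allocate_queue_slots` is a hypothetical name.

```python
from typing import Dict, Optional

# Assumed per-VM disk share levels for Low / Normal / High.
LOW, NORMAL, HIGH = 500, 1000, 2000


def allocate_queue_slots(
    vm_shares: Dict[str, int],
    total_slots: int,
    latency_ms: float,
    congestion_threshold_ms: float = 30.0,  # assumed default congestion threshold
) -> Optional[Dict[str, int]]:
    """Split a datastore's outstanding-I/O slots among VMs by disk shares.

    Returns None (no throttling) while latency is at or below the threshold.
    Above it, each VM gets a share-proportional number of slots, rounded down,
    with a floor of one slot so no VM is starved completely.
    """
    if latency_ms <= congestion_threshold_ms:
        return None
    total_shares = sum(vm_shares.values())
    return {
        vm: max(1, total_slots * shares // total_shares)
        for vm, shares in vm_shares.items()
    }


shares = {"sql01": HIGH, "web01": NORMAL, "test01": LOW}
print(allocate_queue_slots(shares, total_slots=64, latency_ms=12.0))  # None
print(allocate_queue_slots(shares, total_slots=64, latency_ms=42.0))
# {'sql01': 36, 'web01': 18, 'test01': 9}
```

Below the threshold every VM runs unrestricted; only under contention does the High-share VM get roughly four times the slots of the Low-share one.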

VAAI “v2”

- Thin provisioning dead space reclamation. Informs the array when a file is deleted or moved, so the array can free the associated blocks. Complements Storage DRS and Storage vMotion.

- Thin provisioning out-of-space monitoring raises an alarm when physical disk space on the array is running low. If physical space does run out, the affected VM can be stunned, migrated to another datastore, and then resumed without a VM failure. Note: This was supposed to be in vSphere 4.1 but was ditched because not all array vendors implemented it.

- Full file clone for NFS, enabling the NAS device to perform the disk copy internally.

- Enables the creation of thick disks on NFS datastores. Previously they were always thin.

- Vendor-specific VAAI plug-ins are no longer needed, since VMware enhanced its T10 standards support.

- More use of the vSphere 4.1 VAAI “ATS” (atomic test and set) command throughout the VMFS filesystem for improved performance.
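Here is a toy Python model of why dead space reclamation and out-of-space monitoring matter on a thin-provisioned array. The `ThinLun` class and its 75% warning threshold are hypothetical illustrations, not how any particular array implements UNMAP or its alarms.

```python
class ThinLun:
    """Toy thin-provisioned LUN: physical blocks are consumed on first write."""

    def __init__(self, physical_blocks: int, warn_fraction: float = 0.75):
        self.physical_blocks = physical_blocks
        self.warn_fraction = warn_fraction  # hypothetical soft threshold
        self.allocated = set()  # LBAs currently backed by physical storage

    def write(self, lba: int) -> None:
        if lba in self.allocated:
            return  # overwriting an already-backed block needs no new space
        if len(self.allocated) >= self.physical_blocks:
            # Pool exhausted: the point at which the VM would be stunned
            raise RuntimeError("thin pool out of physical space")
        self.allocated.add(lba)

    def unmap(self, lbas) -> None:
        """Dead space reclamation: the host reports these LBAs as no longer in use."""
        self.allocated.difference_update(lbas)

    def space_warning(self) -> bool:
        """Out-of-space monitoring: True once usage crosses the soft threshold."""
        return len(self.allocated) >= self.warn_fraction * self.physical_blocks


lun = ThinLun(physical_blocks=100)
vmdk_lbas = range(80)
for lba in vmdk_lbas:
    lun.write(lba)
print(len(lun.allocated), lun.space_warning())  # 80 True

# The VMDK is deleted or moved away by Storage vMotion, and VMFS issues UNMAP.
lun.unmap(vmdk_lbas)
print(len(lun.allocated), lun.space_warning())  # 0 False
```

Without the `unmap` call, those 80 blocks would stay allocated on the array forever, even though VMFS no longer uses them.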

I’m excited about the dead space reclamation feature; however, there’s no mention of a tie-in with the guest operating system. So if Windows deletes a 100GB file, the VMFS datastore doesn’t know it, and neither does the storage array, so the blocks remain allocated. You still need to use a program like sdelete to zero out the free blocks so the array knows they are no longer needed. You can check out even more geeky details at Chad Sakac’s blog here.
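A second toy model shows the gap described above. It assumes an array that detects and reclaims all-zero blocks (not every array does this), and the `ZeroDetectThinLun` and `GuestFileSystem` classes are hypothetical. Deleting a file in the guest frees nothing on the array, while zeroing the guest’s free space does.

```python
class ZeroDetectThinLun:
    """Toy thin LUN that frees a block when it is overwritten with all zeros."""

    def __init__(self):
        self.blocks = {}  # lba -> data; only physically allocated blocks appear

    def write(self, lba: int, data: bytes) -> None:
        if not any(data):
            self.blocks.pop(lba, None)  # zero-detect: release the backing block
        else:
            self.blocks[lba] = data


class GuestFileSystem:
    """Toy guest file system (think NTFS inside a VMDK) on top of the LUN."""

    def __init__(self, lun: ZeroDetectThinLun):
        self.lun = lun
        self.files = {}    # name -> set of LBAs
        self.free = set()  # LBAs the guest considers free

    def create(self, name: str, lbas, data: bytes) -> None:
        lbas = set(lbas)
        for lba in lbas:
            self.lun.write(lba, data)
        self.files[name] = lbas
        self.free -= lbas

    def delete(self, name: str) -> None:
        # Only guest metadata changes; nothing is sent down to the array.
        self.free |= self.files.pop(name)

    def zero_free_space(self, block_size: int) -> None:
        # Roughly the effect of a free-space zeroing pass with a tool like sdelete.
        for lba in self.free:
            self.lun.write(lba, bytes(block_size))


lun = ZeroDetectThinLun()
fs = GuestFileSystem(lun)
fs.create("big.iso", range(10), b"\xff" * 4096)
fs.delete("big.iso")
print(len(lun.blocks))  # 10: the array still sees the blocks as in use
fs.zero_free_space(4096)
print(len(lun.blocks))  # 0
```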

Hopefully VMware can work with Microsoft and other OS vendors to add that final missing piece of the puzzle for complete end-to-end thin disk awareness. Basically the SATA “TRIM” command for the enterprise. Maybe Windows Server 2012 will have such a feature that VMware can leverage.

Storage vMotion

- Supports the migration of VMs with snapshots and linked clones.

- A new ‘mirror mode’ enables a single-pass block copy of the VM. Writes that occur during the migration are mirrored to both datastores before being acknowledged to the guest OS.
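The single-pass trick is easy to see in a small Python sketch: writes to blocks the copy has already passed are mirrored to the destination, while writes ahead of the copy cursor only need to hit the source because they will be copied later anyway. This is a simplified, sequential model with a hypothetical `migrate_with_mirror` function, not VMware’s mirror driver.

```python
from typing import Dict, List, Tuple


def migrate_with_mirror(
    source: List[str], guest_writes: Dict[int, List[Tuple[int, str]]]
) -> List[str]:
    """Single-pass copy of `source` into a new destination list.

    guest_writes maps a copy step to the (block, value) writes the VM issues at
    that point. Writes always land on the source (which is modified in place);
    if the block was already copied, the write is mirrored to the destination
    too, so no second copy pass is needed.
    """
    dest: List[str] = [""] * len(source)
    for cursor in range(len(source)):
        for block, value in guest_writes.get(cursor, []):
            source[block] = value
            if block < cursor:
                dest[block] = value  # already copied: mirror the write
        dest[cursor] = source[cursor]  # copy the next block
    return dest


src = ["a", "b", "c", "d"]
dst = migrate_with_mirror(src, {2: [(0, "A"), (3, "D")]})
print(dst)         # ['A', 'b', 'c', 'D']
print(dst == src)  # True
```

The write to block 0 arrives after that block was copied, so it is mirrored; the write to block 3 arrives before the cursor reaches it and is simply picked up by the copy.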

If you want more in-depth explanations of these new features, you can read the excellent “What’s New in VMware vSphere 5.0 – Storage” by Duncan Epping here.